Part 1: pictures the evolution of our investment case for SOL in broad strokes without the tech-speak

I’ll get right to the point and share the investment thesis with you. At DeepWaters, it’s our standard practice to provide a detailed memo for every validated decision, outlining the thought process behind it. Typically, this document includes a concise, leading sentence that captures the essence of the investment thesis. Drawing from my experience, I’ve found that most compelling investment opportunities can be summarized in 50 words or less. When it comes to Solana in 2023, the thesis was as follows:

“There is no other sufficiently decentralised system that has under meaningful duress proven to be likely capable of onboarding an application with 1 million daily users. That system is currently traded at 10x discount to ETH.”

That single sentence encapsulates the blood, sweat, and tears poured into months of tireless work by an entire office. Now, let’s see how you react to it:

- Dismissive or doubtful — “Aren’t all public blockchains more or less on the same level, with minor differences? Couldn’t the same be said about Ethereum Ecosystem or TON?”

- Outright rejection — “Oh, come on, don’t give me that BS about your meme-chain that fails on my Phantom, like, every second try.”

- Agreement — “You’re probably right, I largely agree with that assessment.”

- Indifferent — “Whatever, man, pump my bags.”

If you picked the third response (agreement), you likely already understand the core concepts we’ll cover and the reasoning behind them. If you picked any of the other responses, you may not be fully familiar with how Solana’s design and capabilities differ from other blockchain networks. In that case, reading along with this series could give you new insights into the landscape of general-purpose blockchains.

The North-Star Use Case

A great startup typically begins with outstanding founders. And for an outstanding founder, there’s probably nothing more important than having a crystal clear vision. As seasoned investors, we are quite accustomed to listening to founders rant about their projects “revolutionising the industry.”

When approached from first principles, those “revolutionising” designs appear to be cargo culting the existing solutions, mainly Tendermint modifications, with a couple of extra features that are clearly wrong to optimize for. All these systems typically end up being EVM-compatible appendices to the Ethereum base layer, suffering from cold liquidity and absence of users.

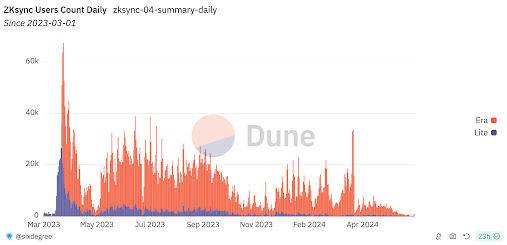

That was the case one cycle ago, and that remains the case today, with even the best-funded infrastructure projects going to mainnet like this:

110 active wallets on ZkSync post TGE

Many such cases.

In our view, the root of these unproductive launches is not technical issues caused by lack of building talent (ZkSync guys are beyond any doubt outstandingly competent, top-notch builders). Instead, it stems from a fundamental misunderstanding of where building efforts should be directed.

Identifying the North-Star use case that will provide a competitive edge for your project is crucial, and early investors should focus intently on this aspect. Such opportunities are rare. Once you apply this filter to your deal pipeline, you'll be surprised at how few teams are genuinely worth your attention. Most of the decks you see feature people highlighting 27 problems they solve without pinpointing the ultimate use case that unlocks new oceans.

Anatoly Yakovenko started thinking about Solana in 2018. Back then, if you had asked Anatoly, “What’s the Solana mission?” he would probably have said something like, “To make sure that you and the Wintermute dude have no arbitrage for any news event across the world.”

We believe this approach is the most effective way to guide your startup. It provides proof of vision and enables meaningful valuation, helping determine whether the project aligns with your investment mandate or not.

Now, let’s unpack what that statement actually means and why it entails a whole bunch of impactful implications worth building towards. You can get an encapsulation from Anatoly himself, by the way:

watch from 5:22

Also, here you can catch Multicoin Capital CEO Kyle Samani discussing their discovery of Solana around 2018–2019. Ethereum was monumental, with its smart contracts paving the way for permissionless innovation atop a decentralized financial system. That was significant. However, the system lagged in speed. At that juncture, the entire crypto community was keenly anticipating Ethereum’s fresh vision for a scaling strategy, as it had become evident that “scaling” would be the buzzword of the decade. Yet, the solution to the scaling quandary remained elusive.

General disappointment in Ethereum’s capabilities started to build, making way for the launch of alternative layer 1s, later called “alts”. It was around that time that projects like Cardano, Tezos, and EOS were getting funded. They were all pretty academic in nature. And that was the first contrast that caught the eye of the Multicoin team when they met Anatoly Yakovenko and his team.

They were pragmatic individuals with a practical approach; Anatoly had spent over a decade working at Qualcomm by then. Rather than attempting to reinvent computer science and complexity theory from scratch, they declared, “We can create a superior version of Ethereum.” As engineers with extensive experience in developing highly optimized software for high-frequency interactions within computer systems, they distinguished themselves from other teams by explaining exactly how their proposed architecture differed and which trade-offs it would entail.

Another thing that differentiated them was the crystal-clear focus on one particular use case — that arbitrage eradication we mentioned earlier. From day one, Anatoly’s ultimate goal was to build an order book system on the blockchain. “Decentralized NASDAQ at the speed of light” was the original description Anatoly was offering — the fastest permissionless global order book in the world.

He was actively engaging with individuals at VC meetups and conferences during March and April of 2018 telling them about permissionless global finance powered by the decentralised order book onchain. The concept of DeFi was not yet established, and there was no indication of emerging platforms like Uniswap or Curve. The unique vision Anatoly presented was the driving force behind early backing from discerning investors. Only a handful of people truly understood the profound product-market fit of Anatoly’s proposed use case.

It’s funny how crypto, a heavily narrative-driven space, navigates ecosystems, labeling them according to the current hype or news agenda. Just as in 2024 the general public is being distracted by “Solana memecoins,” it was similarly distracted one cycle ago, dubbing Solana as “the chain for NFTs.” In reality, what was being developed under the hood has always been optimizing towards global permissionless finance executed via order book.

Again, it was never a matter of sheer competence (even though Solana teams are arguably the best high-frequency optimisers in the world), but rather a matter of the right focus. The lightning-fast order book is worth optimizing for because it unlocks superior DeFi (large TVL doesn’t), which in turn unlocks a gazillion side cases, such as minting assets dirt cheap and trading them right away (which is what makes the NFT and memecoin hype possible).

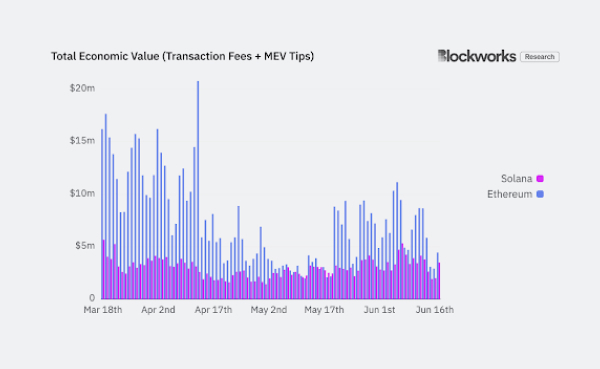

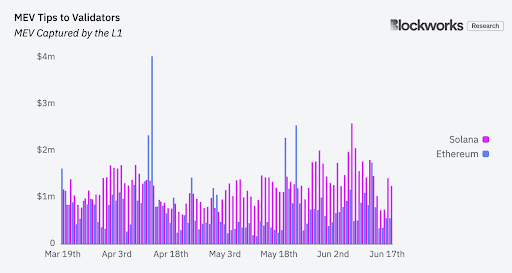

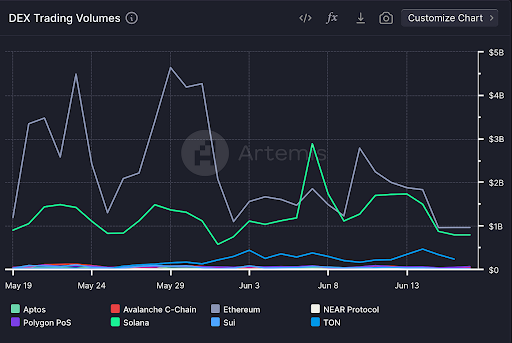

In 2024, the world begins to witness this use case unfolding as Solana flips Ethereum in DEX volumes, and Jito generously rewards validators. For many, the arrival and substantial airdrops of Solana DeFi teams came as a surprise. However, for those with clear focus, it was the inevitable outcome of the work done during the 2022–2023 bear cycle. The teams had a plan, executed it as intended, and investors were not surprised at all.

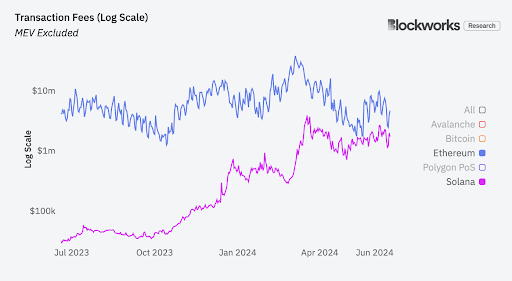

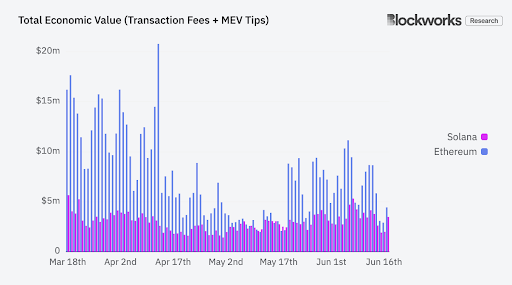

The current state of the network is such that it generates more and more transaction fees, rapidly closing the gap with Ethereum, while still charging its users several orders of magnitude less per transaction,

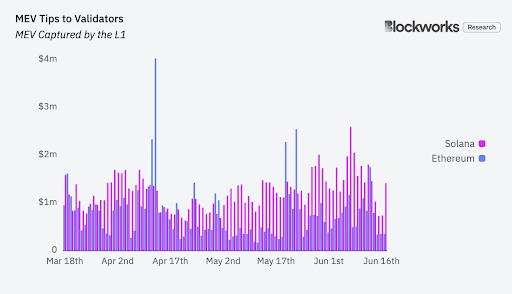

all the while delivering more extractable value to the network validators, further stimulating decentralisation as it becomes more lucrative to join.

kudos to the Blockworks team for priceless data

This is beginning to mold a new reality which, once again, was destined to materialise because the Solana team established the right perspective from day one and steadfastly adhered to it, doubling down at every opportunity.

It’s no wonder that so many TradFi professionals are gravitating towards Solana, out of all Layer 1s: they recognise a compelling use case worth building for. Firms like Jump Trading and its Firedancer spin-off, building for 1m TPS towards 2025, are excellent illustrations of this trend. The speed-of-light order book use case holds paramount importance for them. Consequently, there’s a notable presence of talented firmware engineers and teams with high-frequency trading backgrounds, dedicated to optimizing for speed and throughput.

Most of the other ecosystems out there are typically populated either by High Elves approaching development from university cabinets (the relatively good scenario, which sometimes leads to optimizing for the right things), or by outright fraudsters who barely understand the bottlenecks and necessary optimisations (the worst-case scenario, true for 99% of Layer1s). Solana stood out from day one due to its founders’ extensive background in optimizing for speed throughout their entire professional careers.

Speed optimisation had been their lifelong dedication. And this mission, NASDAQ onchain, has been the Solana North Star for the entirety of its road map. It never changed. Anatoly would come and say it, over and over again. No matter the cycle, no matter the price. In poverty, in prosperity. It is a perfect example of a founder’s vision and focus.

Solana’s contrarian stance

The ultimate use case for public blockchains has largely centered around decentralized permissionless finance. However, the industry has tended to over-emphasise the term “decentralized” within this context, which is intuitively important but often lacks a clear, universally accepted definition.

No matter how you define it, there are a few systems that have “obviously” achieved it. In 2024, Ethereum surpassed 1 million 32 ETH accounts, with approximately 10–15 thousand actively validating full nodes. The downside is that Ethereum made significant sacrifices in terms of speed, which is what initially gave rise to the concept of the “alt layer 1” aimed at overcoming the latency bottleneck.

Alternative layer 1s often opt for Tendermint BFT or its modifications, which enable high-performance systems only for as long as the number of validators remains low, within the range of 100–200 full nodes. If you were to scale something like Cosmos Hub to Ethereum-level decentralisation, the system would probably collapse, which is why many app-chains keep their environment permissioned and only allow for occasional expansions. Similarly, on the Ethereum L2 front, permissionless validation is not even attempted due to similar concerns.

*These scalability issues do not exclusively hinge on the consensus design and will be discussed in detail in the following posts of the series.
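To get a rough feel for why validator count matters in BFT-style voting, here is a minimal sketch. It assumes a deliberately naive model that is not taken from any specific implementation: two voting phases per round, with every validator sending its vote directly to every other validator. Real networks use gossip and other optimisations, so treat the numbers as an illustration of quadratic growth, not as a measurement. The function name `votes_per_round` is hypothetical.

```python
def votes_per_round(validators: int, voting_phases: int = 2) -> int:
    """Vote messages per round in a naive all-to-all model (an assumption).

    Each validator sends one vote to every other validator in each phase,
    so the total grows with the square of the validator count.
    """
    if validators < 1:
        raise ValueError("validators must be a positive integer")
    return voting_phases * validators * (validators - 1)


# Validator counts picked to mirror the ranges discussed in the text.
for n in (100, 200, 1_500, 10_000):
    print(f"{n:>6} validators -> {votes_per_round(n):>12,} vote messages per round")
```

Under this simplified model, going from 100 to 10,000 validators multiplies the validator count by 100 but the vote traffic by roughly 10,000, which is the intuition behind keeping such validator sets small.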

As a result, we now have the current status quo: a massive, slow financial layer with concentrated liquidity underneath a multitude of centralized execution environments competing for access to base layer liquidity. This leads to a fragmented user base and liquidity, giving rise to a plethora of startups that, in turn, attempt to solve these problems that arise from the original bottlenecks. This cycle perpetuates, with each new solution creating new challenges for the next generation of startups.

The original trade-offs made by Ethereum have led to a series of problems that can only be addressed by introducing another set of issues. These new challenges then necessitate similar solutions, perpetuating a cycle where one set of difficulties is traded for another. This vicious circle maintains momentum, prompting every participant in the ecosystem to justify substantial VC funding — ranging from 100 to 200 million dollars — under the pretext of solving critical issues.

Source: CryptoRank.io, “Celestia (TIA) Private Funding Rounds, Token Sale Review & Tokenomics Analysis”

We need Celestia DA layer for execution environments to upload data to the base layer with high level of economic security. $111m.

Source: CryptoRank.io, “EigenLayer (EIGEN) Funding Rounds, Token Sale Review & Tokenomics Analysis”

We need Eigen DA for the same reasons + we can utilise the restaked ETH to spin up secure environment for new ideas (all of which also solve exclusively problems natural for the universe where everyone is optimising towards utilising ETH liquidity). $164m.

Source: CryptoRank.io, “LayerZero (ZRO) Funding Rounds, Token Sale Review & Tokenomics Analysis”

We need to solve interoperability in order to secure composable communication across the hundreds of L2s. $263m.

That is not to dunk on the mentioned projects for optimising for the wrong things (we at DeepWaters are ourselves investors in one of them) but to highlight a legitimate question: are we even sure that we are going the right way? What if we got something wrong at some crossroad in the past?

The current consensus (called the Modular Thesis) says that we’ve reached the limit of scaling for each layer as a monolith, so we need to dismember our blockchains so that each part specialises in a single set of functions, and then assemble those parts like a Lego castle. After all, that is the way the public Internet works.

Solana is just about the only team in the space that said from the beginning, and has been saying all this time, that the current modular design path is essentially a monkey-job: we are optimising for problems that should never have existed in the first place.

The ultimate modular outcome potentially results in a composable high-throughput environment. So… Why the detour? Why not just build a composable high-throughput environment on the base layer?

The main reason for “why not” has consistently been the consensus that it’s simply not feasible, particularly when combined with ultra-low transaction fees (where the network ostensibly wouldn’t generate sufficient revenue).

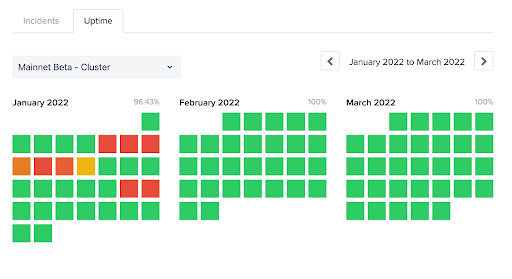

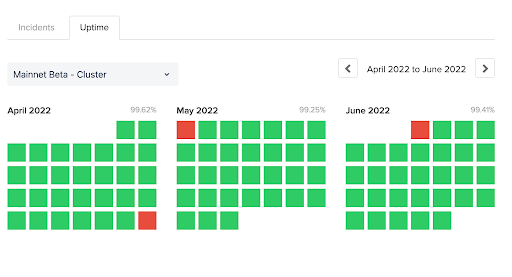

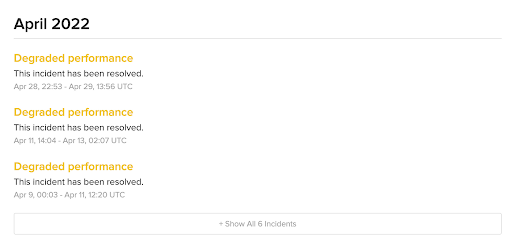

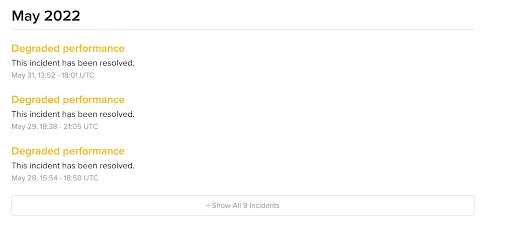

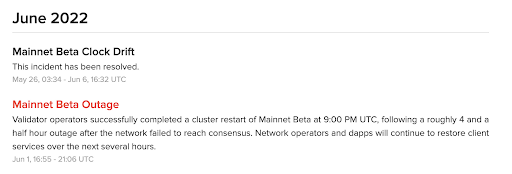

This consensus was largely solidified in 2022 when the first consumer dApps like STEPN launched on Solana, causing network congestion that overwhelmed its capacity. The blockchain would intermittently go down, halting block production or experiencing significant performance degradation. I would say that by Q2 2022, the industry appeared to have conclusively decided to pursue the modular approach and abandon vertical Layer 1 scaling through parallel execution, a path Solana vocally stuck with.

These performance issues have been a significant adoption barrier in terms of public crypto sentiment towards Solana, earning it the moniker “the downtime chain.”

Yet, it was during that time when I first developed the prototype of my future Solana thesis. The question that troubled me was not “Why is Solana down?” but rather “Why did the apps choose to come here, of all places?” Previously, consumer app attempts typically gravitated towards chains like BNB or Polygon. So, why Solana now?

My simple assumption has always been that “mass adoption” can only come from the application layer. There should be dApps that onboard users who interact with the chain. Social media apps, creators’ economy, games, and above all global finance require:

- frequent interactions with users

- high economic velocity

Historical tx stats across major L1s. Source: Artemis

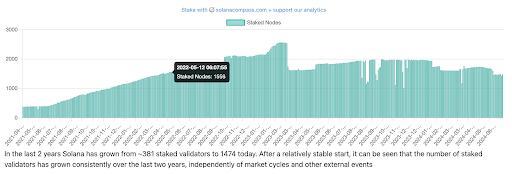

Applications started to arrive at Solana because it was lightning fast and offered much cheaper fees even compared to BNB and Polygon. And unlike BNB and Polygon, Solana managed to achieve all that while coordinating the permissionless workload across 1500–2000 staked nodes.

Source: Solana Compass

That was the first time I personally arrived at an investment thesis that the Solana community later dubbed “OPOS” — Only Possible on Solana. This pertains to a use case whose design necessitates having an architecture that is:

- Lightning fast

- Dirt cheap

- Sufficiently decentralised

- Battle tested

The “battle-tested” component has always been and remains the most important, in my view. While many chains showcase high TPS in controlled environments, the true test comes when conditions get tough, as they often do in a decentralised environment with adversarial actors at play.

What happened to Solana via STEPN onboarding, along with other consumer use cases like move-to-earn (m2e) games and the general NFT craze, was the first instance of this design being truly battle tested.

Arguably, the first battle was lost. The network degraded and remained unusable for a very long time.

But in my opinion, the investment disposition was as follows:

- The news: Solana went down under duress.

- The matter: Solana attracted duress.

News is something that attracts your attention. Matter is something that can have a long-term impact.

Prior to that, only BNB Chain and Polygon had managed to attract somewhat noticeable demand, both being the classic BFT instantiation of <100-validators-or-die. Nobody back then ever talked about what would have happened to all these PoS systems if they were hit by the STEPN adoption wave the way Solana was.

Naturally, the question emerged — are these performance issues intrinsic limitations that necessitate a complete overhaul, or can they be resolved through optimization? If the former, we’re witnessing a valiant effort and a noble failure. If the latter, we’re looking at a transformative investment opportunity, given the existence of a sufficiently decentralized network capable of processing 50 million transactions daily at a cost of mere fractions of a cent per client.

The final decision we made back then was to observe and monitor how Solana would address this situation within its ecosystem.

What followed can be termed “The Great Solana BUIDL Season of 2022–2023,” during which a plethora of upgrades, primitives, and optimisations were introduced. We will discuss them in detail later in the series.

These advancements significantly transformed the Solana ecosystem, shaping it into what it is today (as of summer 2024, fresh off the 1.18 upgrade):

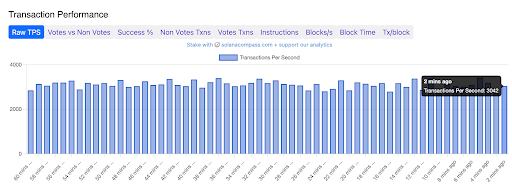

With a stable 3,000 TPS performance (Ethereum is floating around 100 TPS in total with all its L2s),

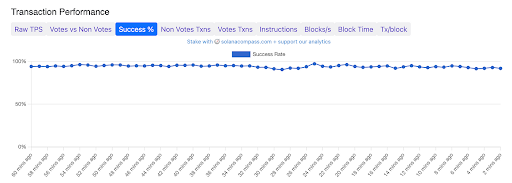

99% success rate,

and 400ms block time,

coordinating across over 5,000 nodes with 1,500 validators,

this system generates trading volume comparable to Ethereum

and rewards its validators more generously,

ultimately overthrowing the main competitor in Total Economic Value (TX fees + MEV tips to validators), as coined by Blockworks analysts.
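The Total Economic Value metric mentioned above is simple enough to write down. Below is a minimal sketch of the definition as given in the text (transaction fees plus MEV tips to validators). The class name, field names, and all dollar figures are hypothetical placeholders chosen to make the arithmetic easy to follow; they are not real network data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DailyNetworkRevenue:
    """Hypothetical daily figures in USD; placeholders, not real data."""
    name: str
    tx_fees_usd: float
    mev_tips_usd: float

    @property
    def total_economic_value(self) -> float:
        # TEV as described in the text: transaction fees plus MEV tips.
        return self.tx_fees_usd + self.mev_tips_usd


def tev_ratio(a: DailyNetworkRevenue, b: DailyNetworkRevenue) -> float:
    """How many times larger a's TEV is than b's."""
    return a.total_economic_value / b.total_economic_value


# Placeholder numbers only: chain_a earns less in fees but more in MEV tips.
chain_a = DailyNetworkRevenue("chain_a", tx_fees_usd=2_000_000, mev_tips_usd=3_000_000)
chain_b = DailyNetworkRevenue("chain_b", tx_fees_usd=3_500_000, mev_tips_usd=500_000)

print(chain_a.total_economic_value, chain_b.total_economic_value, tev_ratio(chain_a, chain_b))
```

The placeholder figures illustrate why the metric matters for the comparison: a network can trail on transaction fees alone and still lead once MEV tips are counted.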

Concluding, I would like to say again:

This traction emanates from one key feature you have to pay attention to in venture: focus on the right use case. The most important thing is knowing what needs to be built. Then, once that is identified, you need a competent team with the dedication to go through the battles lost and the battles won for as long as the thesis has not been proven wrong.

Throughout the recent bear cycle, the Solana team endured an unimaginable amount of criticism, being labeled “the downtime chain”, “the degen chain”, “the NFT sandbox”, “the FTX chain”, “the Scam Bankman-Fried puppet”, “the dead chain”, “the memecoin scamfield”, and numerous other derogatory titles across crypto Twitter. That is a level of scrutiny no other team in the industry has had to face.

Meanwhile, what has actually been happening all this time is that:

- the founding team challenged the first principles of blockchain design,

- gathered a community of like-minded developers

- and went on doubling down on its thesis.

What we are witnessing now is their vision being realised, with the network outperforming competitors in every meaningful metric (TVL is not a meaningful metric).

By identifying the ultimate use case, the team developed a roadmap of logically consistent optimisations, where each new solution leads to a coherent progression of the initial vision. This approach contrasts with the more inconsistent development paths seen in other projects, which often jump between strategies and narratives, moving from sharding to L2s and from global state machine to ultrasound money. This lack of focus ultimately demonstrates the absence of a concrete vision to optimize for.

In Solana’s case, the consistent optimisation path is a testament to the clarity of thought and the soundness of vision.

In the next part, we will speak a little more about the actual network design and how it challenged the contemporary proof of stake “best practices”.

We will also discuss the pivotal moments where we at DeepWaters converted the major liquid position into SOL.